The computer revolution: how it's changed our world over 60 years

![computer revolution assignment The BlueGene/L supercomputer is presented to the [media] at the Lawrence Livermore National Laboratory in Livermore, California, October 27, 2005. The BlueGene/L is the world's fastest supercomputer and will be used to ensure [U.S. nuclear weapons stockpile] is safe and reliable without testing. The BlueGene/L computer made by IBM can perform a record 280.6 trillion operations per second.](https://assets.weforum.org/article/image/large_2JLMYGrCYRb1GpPLelIHRm1J15hLVXSttNz3qV_Gu54.jpg)

The BlueGene/L supercomputer can perform 280.6 trillion operations per second. Image: REUTERS/Kimberly White

Justin Zobel

It is a truism that computing continues to change our world. It shapes how objects are designed, what information we receive, how and where we work, and who we meet and do business with. And computing changes our understanding of the world around us and the universe beyond.

For example, while computers were initially used in weather forecasting as no more than an efficient way to assemble observations and do calculations, today our understanding of weather is almost entirely mediated by computational models.

Another example is biology. Where once research was done entirely in the lab (or in the wild) and then captured in a model, it often now begins in a predictive model, which then determines what might be explored in the real world.

The transformation that is due to computation is often described as digital disruption. But an aspect of this transformation that can easily be overlooked is that computing has been disrupting itself.

Evolution and revolution

Each wave of new computational technology has tended to lead to new kinds of systems, new ways of creating tools, new forms of data, and so on, which have often overturned their predecessors. What has seemed to be evolution is, in some ways, a series of revolutions.

But the development of computing technologies is more than a chain of innovation – a process that’s been a hallmark of the physical technologies that shape our world.

For example, there is a chain of inspiration from waterwheel, to steam engine, to internal combustion engine. Underlying this is a process of enablement. The industry of steam engine construction yielded the skills, materials and tools used in construction of the first internal combustion engines.

In computing, something richer is happening where new technologies emerge, not only by replacing predecessors, but also by enveloping them. Computing is creating platforms on which it reinvents itself, reaching up to the next platform.

Getting connected

Arguably, the most dramatic of these innovations is the web. During the 1970s and 1980s, there were independent advances in the availability of cheap, fast computing, of affordable disk storage and of networking.

Compute and storage were taken up in personal computers, which at that stage were standalone, used almost entirely for gaming and word processing. At the same time, networking technologies became pervasive in university computer science departments, where they enabled, for the first time, the collaborative development of software.

This was the emergence of a culture of open-source development, in which widely spread communities not only used common operating systems, programming languages and tools, but collaboratively contributed to them.

As networks spread, tools developed in one place could be rapidly promoted, shared and deployed elsewhere. This dramatically changed the notion of software ownership, of how software was designed and created, and of who controlled the environments we use.

The networks themselves became more uniform and interlinked, creating the global internet, a digital traffic infrastructure. Increases in computing power meant there was spare capacity for providing services remotely.

The falling cost of disk meant that system administrators could set aside storage to host repositories that could be accessed globally. The internet was thus used not just for email and chat forums (known then as news groups) but, increasingly, as an exchange mechanism for data and code.

This was in strong contrast to the systems used in business at that time, which were customised, isolated, and rigid.

With hindsight, the confluence of networking, compute and storage at the start of the 1990s, coupled with the open-source culture of sharing, seems almost miraculous. An environment ready for something remarkable, but without even a hint of what that thing might be.

The ‘superhighway’

It was to enhance this environment that then US Vice President Al Gore proposed in 1992 the “information superhighway”, before any major commercial or social uses of the internet had appeared.

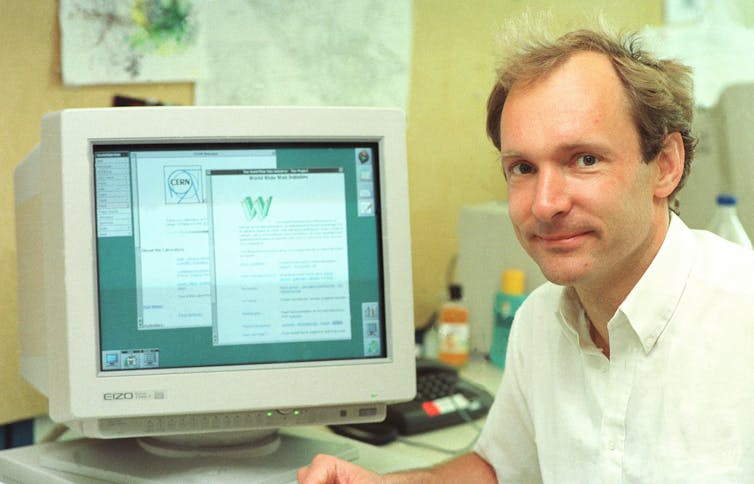

Meanwhile, in 1990, researchers at CERN, including Tim Berners-Lee, created a system for storing documents and publishing them to the internet, which they called the world wide web.

As knowledge of this system spread on the internet (transmitted by the new model of open-source software systems), people began using it via increasingly sophisticated browsers. They also began to write documents specifically for online publication – that is, web pages.

As web pages became interactive and resources moved online, the web became a platform that has transformed society. But it also transformed computing.

With the emergence of the web came the decline of the importance of the standalone computer, dependent on local storage.

We all connect

The value of these systems is due to another confluence: the arrival on the web of vast numbers of users. For example, without behaviours to learn from, search engines would not work well, so human actions have become part of the system.

There are (contentious) narratives of ever-improving technology, but also an entirely unarguable narrative of computing itself being transformed by becoming so deeply embedded in our daily lives.

This is, in many ways, the essence of big data. Computing is being fed by human data streams: traffic data, airline trips, banking transactions, social media and so on.

The challenges of the discipline have been dramatically changed by this data, and also by the fact that the products of the data (such as traffic control and targeted marketing) have immediate impacts on people.

Software that runs robustly on a single computer is very different from software that interacts rapidly and extensively with the human world. This difference gives rise to needs for new kinds of technologies and experts, in ways not even remotely anticipated by the researchers who created the technologies that led to this transformation.

Decisions that were once made by hand-coded algorithms are now made entirely by learning from data. Whole fields of study may become obsolete.

The discipline does indeed disrupt itself. And as the next wave of technology arrives (immersive environments? digital implants? aware homes?), it will happen again.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.

History of computers: A brief timeline

The history of computers began with primitive designs in the early 19th century and went on to change the world during the 20th century.

The history of computers goes back over 200 years. First theorized by mathematicians and entrepreneurs, mechanical calculating machines were designed and built during the 19th century to solve increasingly complex number-crunching challenges. Advancing technology enabled ever more complex computers by the early 20th century, and computers became larger and more powerful.

Today, computers are almost unrecognizable from designs of the 19th century, such as Charles Babbage's Analytical Engine, or even from the huge computers of the 20th century that occupied whole rooms, such as the Electronic Numerical Integrator and Computer (ENIAC).

Here's a brief history of computers, from their primitive number-crunching origins to the powerful modern-day machines that surf the Internet, run games and stream multimedia.

19th century

1801: Joseph Marie Jacquard, a French merchant and inventor, invents a loom that uses punched wooden cards to automatically weave fabric designs. Early computers would use similar punch cards.

1821: English mathematician Charles Babbage conceives of a steam-driven calculating machine that would be able to compute tables of numbers. Funded by the British government, the project, called the "Difference Engine," fails due to the lack of technology at the time, according to the University of Minnesota.

1843: Ada Lovelace, an English mathematician and the daughter of poet Lord Byron, writes the world's first computer program. According to Anna Siffert, a professor of theoretical mathematics at the University of Münster in Germany, Lovelace writes the first program while translating a paper on Babbage's Analytical Engine from French into English. "She also provides her own comments on the text. Her annotations, simply called 'notes,' turn out to be three times as long as the actual transcript," Siffert wrote in an article for The Max Planck Society. "Lovelace also adds a step-by-step description for computation of Bernoulli numbers with Babbage's machine – basically an algorithm – which, in effect, makes her the world's first computer programmer." Bernoulli numbers are a sequence of rational numbers often used in computation.
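To give a sense of what a step-by-step computation of Bernoulli numbers can look like, here is a minimal modern sketch in Python. It is not a reconstruction of Lovelace's program: it assumes a standard textbook recurrence (with the convention B_1 = -1/2), and the function name `bernoulli_numbers` is purely illustrative.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention B_1 = -1/2)."""
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        # Recurrence: sum_{k=0}^{m} C(m+1, k) * B_k = 0, solved for B_m.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-acc / (m + 1))
    return b


first_five = bernoulli_numbers(4)
```

Exact fractions are used rather than floating-point values because Bernoulli numbers are rational and rounding errors would otherwise accumulate through the recurrence.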

1853: Swedish inventor Per Georg Scheutz and his son Edvard design the world's first printing calculator. The machine is significant for being the first to "compute tabular differences and print the results," according to Uta C. Merzbach's book, "Georg Scheutz and the First Printing Calculator" (Smithsonian Institution Press, 1977).

1890: Herman Hollerith designs a punch-card system to help calculate the 1890 U.S. Census. The machine saves the government several years of calculations, and the U.S. taxpayer approximately $5 million, according to Columbia University. Hollerith later establishes a company that will eventually become International Business Machines Corporation (IBM).

Early 20th century

1931: At the Massachusetts Institute of Technology (MIT), Vannevar Bush invents and builds the Differential Analyzer, the first large-scale automatic general-purpose mechanical analog computer, according to Stanford University.

1936: Alan Turing, a British scientist and mathematician, presents the principle of a universal machine, later called the Turing machine, in a paper called "On Computable Numbers…" according to Chris Bernhardt's book "Turing's Vision" (The MIT Press, 2017). Turing machines are capable of computing anything that is computable. The central concept of the modern computer is based on his ideas. Turing is later involved in the development of the Turing-Welchman Bombe, an electro-mechanical device designed to decipher Nazi codes during World War II, according to the UK's National Museum of Computing.
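The idea of a Turing machine can be illustrated with a very small simulator. The sketch below is an assumption-laden toy, not Turing's own notation: the rule-table format, the state names "start" and "halt", the blank symbol "_", and the example machine `FLIP_BITS` are all hypothetical choices made for illustration.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Run a one-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical example machine: invert every bit, then halt at the first blank.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

result = run_turing_machine(FLIP_BITS, "1011")
```

Because the rule table is passed in as data, the same simulator can run any machine described in this format, which loosely echoes the idea of a single universal machine that executes other machines' descriptions.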

1937: John Vincent Atanasoff, a professor of physics and mathematics at Iowa State University, submits a grant proposal to build the first electric-only computer, without using gears, cams, belts or shafts.

1939: David Packard and Bill Hewlett found the Hewlett-Packard Company in Palo Alto, California. The pair decide the name of their new company by the toss of a coin, and Hewlett-Packard's first headquarters are in Packard's garage, according to MIT.

1941: German inventor and engineer Konrad Zuse completes his Z3 machine, the world's earliest digital computer, according to Gerard O'Regan's book "A Brief History of Computing" (Springer, 2021). The machine was destroyed during a bombing raid on Berlin during World War II. Zuse fled the German capital after the defeat of Nazi Germany and later released the world's first commercial digital computer, the Z4, in 1950, according to O'Regan.

1941: Atanasoff and his graduate student, Clifford Berry, design the first digital electronic computer in the U.S., called the Atanasoff-Berry Computer (ABC). This marks the first time a computer is able to store information on its main memory, and the machine is capable of performing one operation every 15 seconds, according to the book "Birthing the Computer" (Cambridge Scholars Publishing, 2016).

1945: Two professors at the University of Pennsylvania, John Mauchly and J. Presper Eckert, design and build the Electronic Numerical Integrator and Computer (ENIAC). The machine is the first "automatic, general-purpose, electronic, decimal, digital computer," according to Edwin D. Reilly's book "Milestones in Computer Science and Information Technology" (Greenwood Press, 2003).

1946: Mauchly and Eckert leave the University of Pennsylvania and receive funding from the Census Bureau to build the UNIVAC, the first commercial computer for business and government applications.

1947: William Shockley, John Bardeen and Walter Brattain of Bell Laboratories invent the transistor. They discover how to make an electric switch with solid materials and without the need for a vacuum.

1949: A team at the University of Cambridge develops the Electronic Delay Storage Automatic Calculator (EDSAC), "the first practical stored-program computer," according to O'Regan. "EDSAC ran its first program in May 1949 when it calculated a table of squares and a list of prime numbers," O'Regan wrote. In November 1949, scientists with the Council for Scientific and Industrial Research (CSIR), now called CSIRO, build Australia's first digital computer, called the Council for Scientific and Industrial Research Automatic Computer (CSIRAC). CSIRAC is the first digital computer in the world to play music, according to O'Regan.

Late 20th century

1953: Grace Hopper develops the first computer language, which eventually becomes known as COBOL (COmmon Business-Oriented Language), according to the National Museum of American History. Hopper is later dubbed the "First Lady of Software" in her posthumous Presidential Medal of Freedom citation. Also this year, Thomas Johnson Watson Jr., son of IBM CEO Thomas Johnson Watson Sr., conceives the IBM 701 EDPM to help the United Nations keep tabs on Korea during the war.

1954: John Backus and his team of programmers at IBM publish a paper describing their newly created FORTRAN programming language, an acronym for FORmula TRANslation, according to MIT.

1958: Jack Kilby and Robert Noyce unveil the integrated circuit, known as the computer chip. Kilby is later awarded the Nobel Prize in Physics for his work.

1968: Douglas Engelbart reveals a prototype of the modern computer at the Fall Joint Computer Conference, San Francisco. His presentation, called "A Research Center for Augmenting Human Intellect," includes a live demonstration of his computer, including a mouse and a graphical user interface (GUI), according to the Doug Engelbart Institute. This marks the development of the computer from a specialized machine for academics to a technology that is more accessible to the general public.

1969: Ken Thompson, Dennis Ritchie and a group of other developers at Bell Labs produce UNIX, an operating system that made "large-scale networking of diverse computing systems – and the internet – practical," according to Bell Labs. The team behind UNIX continues to develop the operating system using the C programming language, which they also optimize.

1970: The newly formed Intel unveils the Intel 1103, the first Dynamic Random-Access Memory (DRAM) chip.

1971: A team of IBM engineers led by Alan Shugart invents the "floppy disk," enabling data to be shared among different computers.

1972: Ralph Baer, a German-American engineer, releases Magnavox Odyssey, the world's first home game console, in September 1972, according to the Computer Museum of America. Months later, entrepreneur Nolan Bushnell and engineer Al Alcorn with Atari release Pong, the world's first commercially successful video game.

1973: Robert Metcalfe, a member of the research staff for Xerox, develops Ethernet for connecting multiple computers and other hardware.

1975: The cover of the January issue of "Popular Electronics" highlights the Altair 8800 as the "world's first minicomputer kit to rival commercial models." After seeing the magazine issue, two "computer geeks," Paul Allen and Bill Gates, offer to write software for the Altair, using the new BASIC language. On April 4, after the success of this first endeavor, the two childhood friends form their own software company, Microsoft.

1976: Steve Jobs and Steve Wozniak co-found Apple Computer on April Fool's Day. They unveil the Apple I, the first computer with a single circuit board and ROM (Read Only Memory), according to MIT.

1977: The Commodore Personal Electronic Transactor (PET) is released onto the home computer market, featuring an MOS Technology 8-bit 6502 microprocessor, which controls the screen, keyboard and cassette player. The PET is especially successful in the education market, according to O'Regan.

1977: Radio Shack begins its initial production run of 3,000 TRS-80 Model 1 computers (disparagingly known as the "Trash 80"), priced at $599, according to the National Museum of American History. Within a year, the company takes 250,000 orders for the computer, according to the book "How TRS-80 Enthusiasts Helped Spark the PC Revolution" (The Seeker Books, 2007).

1977: The first West Coast Computer Faire is held in San Francisco. Jobs and Wozniak present the Apple II computer at the Faire, which includes color graphics and features an audio cassette drive for storage.

1978: VisiCalc, the first computerized spreadsheet program, is introduced.

1979: MicroPro International, founded by software engineer Seymour Rubenstein, releases WordStar, the world's first commercially successful word processor. WordStar is programmed by Rob Barnaby, and includes 137,000 lines of code, according to Matthew G. Kirschenbaum's book "Track Changes: A Literary History of Word Processing" (Harvard University Press, 2016).

1981: "Acorn," IBM's first personal computer, is released onto the market at a price point of $1,565, according to IBM. Acorn uses the MS-DOS operating system from Microsoft. Optional features include a display, printer, two diskette drives, extra memory, a game adapter and more.

1983: The Apple Lisa, standing for "Local Integrated Software Architecture" but also the name of Steve Jobs' daughter, according to the National Museum of American History (NMAH), is the first personal computer to feature a GUI. The machine also includes a drop-down menu and icons. Also this year, the Gavilan SC is released and is the first portable computer with a flip-form design and the very first to be sold as a "laptop."

1984: The Apple Macintosh is announced to the world during a Super Bowl advertisement. The Macintosh is launched with a retail price of $2,500, according to the NMAH.

1985: As a response to the Apple Lisa's GUI, Microsoft releases Windows in November 1985, the Guardian reported. Meanwhile, Commodore announces the Amiga 1000.

1989: Tim Berners-Lee, a British researcher at the European Organization for Nuclear Research (CERN), submits his proposal for what would become the World Wide Web. His paper details his ideas for Hyper Text Markup Language (HTML), the building blocks of the Web.

1993: The Pentium microprocessor advances the use of graphics and music on PCs.

1996: Sergey Brin and Larry Page develop the Google search engine at Stanford University.

1997: Microsoft invests $150 million in Apple, which at the time is struggling financially. This investment ends an ongoing court case in which Apple accused Microsoft of copying its operating system.

1999: Wi-Fi, the abbreviated term for "wireless fidelity," is developed, initially covering a distance of up to 300 feet (91 meters), Wired reported.

21st century

2001: Mac OS X, later renamed OS X then simply macOS, is released by Apple as the successor to its standard Mac Operating System. OS X goes through 16 different versions, each with "10" as its title, and the first nine iterations are nicknamed after big cats, with the first being codenamed "Cheetah," TechRadar reported.

2003: AMD's Athlon 64, the first 64-bit processor for personal computers, is released to customers.

2004: The Mozilla Corporation launches Mozilla Firefox 1.0. The Web browser is one of the first major challenges to Internet Explorer, owned by Microsoft. During its first five years, Firefox exceeds a billion downloads by users, according to the Web Design Museum.

2005: Google buys Android, a Linux-based mobile phone operating system.

2006: The MacBook Pro from Apple hits the shelves. The Pro is the company's first Intel-based, dual-core mobile computer.

2009: Microsoft launches Windows 7 on July 22. The new operating system features the ability to pin applications to the taskbar, scatter windows away by shaking another window, easy-to-access jumplists, easier previews of tiles and more, TechRadar reported.

2010: The iPad, Apple's flagship handheld tablet, is unveiled.

2011: Google releases the Chromebook, which runs on Google Chrome OS.

2015: Apple releases the Apple Watch. Microsoft releases Windows 10.

2016: The first reprogrammable quantum computer was created. "Until now, there hasn't been any quantum-computing platform that had the capability to program new algorithms into their system. They're usually each tailored to attack a particular algorithm," said study lead author Shantanu Debnath, a quantum physicist and optical engineer at the University of Maryland, College Park.

2017: The Defense Advanced Research Projects Agency (DARPA) is developing a new "Molecular Informatics" program that uses molecules as computers. "Chemistry offers a rich set of properties that we may be able to harness for rapid, scalable information storage and processing," Anne Fischer, program manager in DARPA's Defense Sciences Office, said in a statement. "Millions of molecules exist, and each molecule has a unique three-dimensional atomic structure as well as variables such as shape, size, or even color. This richness provides a vast design space for exploring novel and multi-value ways to encode and process data beyond the 0s and 1s of current logic-based, digital architectures."

2019: A team at Google became the first to demonstrate quantum supremacy – creating a quantum computer that could feasibly outperform the most powerful classical computer – albeit for a very specific problem with no practical real-world application. The team described the computer, dubbed "Sycamore," in a paper that same year in the journal Nature. Achieving quantum advantage – in which a quantum computer solves a problem with real-world applications faster than the most powerful classical computer – is still a ways off.

2022: The first exascale supercomputer, and the world's fastest, Frontier, went online at the Oak Ridge Leadership Computing Facility (OLCF) in Tennessee. Built by Hewlett Packard Enterprise (HPE) at the cost of $600 million, Frontier uses nearly 10,000 AMD EPYC 7453 64-core CPUs alongside nearly 40,000 AMD Radeon Instinct MI250X GPUs. This machine ushered in the era of exascale computing, which refers to systems that can exceed one exaFLOPS, a performance measure equal to a quintillion floating-point operations per second. Only one machine – Frontier – is currently capable of reaching such levels of performance. It is currently being used as a tool to aid scientific discovery.

What is the first computer in history?

Charles Babbage's Difference Engine, designed in the 1820s, is considered the first "mechanical" computer in history, according to the Science Museum in the U.K. Powered by steam with a hand crank, the machine calculated a series of values and printed the results in a table.

What are the five generations of computing?

The "five generations of computing" is a framework for assessing the entire history of computing and the key technological advancements throughout it.

The first generation, spanning the 1940s to the 1950s, covered vacuum tube-based machines. The second then progressed to incorporate transistor-based computing between the 50s and the 60s. In the 60s and 70s, the third generation gave rise to integrated circuit-based computing. We are now in between the fourth and fifth generations of computing, which are microprocessor-based and AI-based computing.

What is the most powerful computer in the world?

As of November 2023, the most powerful computer in the world is the Frontier supercomputer. The machine, which can reach a performance level of up to 1.102 exaFLOPS, ushered in the age of exascale computing in 2022 when it went online at Tennessee's Oak Ridge Leadership Computing Facility (OLCF).

There is, however, a potentially more powerful supercomputer waiting in the wings in the form of the Aurora supercomputer, which is housed at the Argonne National Laboratory (ANL) outside of Chicago. Aurora went online in November 2023. Right now, it lags far behind Frontier, with performance levels of just 585.34 petaFLOPS (roughly half the performance of Frontier), although it's still not finished. When work is completed, the supercomputer is expected to reach performance levels higher than 2 exaFLOPS.
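The comparison between the two machines is a matter of unit conversion, which the following short sketch makes explicit. It uses only the figures quoted above; the variable names are illustrative, and the 2 exaFLOPS figure is treated as a lower bound on Aurora's expected performance rather than a measured result.

```python
PETA = 10**15  # one petaFLOPS, in floating-point operations per second
EXA = 10**18   # one exaFLOPS, in floating-point operations per second

frontier_flops = 1.102 * EXA   # Frontier's quoted peak performance
aurora_flops = 585.34 * PETA   # Aurora's quoted performance in November 2023

# Aurora's performance as a fraction of Frontier's (a little over one half).
current_ratio = aurora_flops / frontier_flops

# Lower bound on the ratio if Aurora reaches its expected 2 exaFLOPS.
projected_ratio = (2 * EXA) / frontier_flops
```

On these figures Aurora currently delivers about 53% of Frontier's performance, and it would deliver roughly 1.8 times Frontier's performance if it reaches 2 exaFLOPS.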

What was the first killer app?

Killer apps are widely understood to be those so essential that they are core to the technology they run on. There have been many through the years – from Word for Windows in 1989 to iTunes in 2001 to social media apps like WhatsApp in more recent years.

Several pieces of software may stake a claim to be the first killer app, but there is a broad consensus that VisiCalc, a spreadsheet program created by VisiCorp and originally released for the Apple II in 1979, holds that title. Steve Jobs even credits this app for propelling the Apple II to become the success it was, according to co-creator Dan Bricklin.

Additional resources

- Fortune: A Look Back At 40 Years of Apple

- The New Yorker: The First Windows

- "A Brief History of Computing" by Gerard O'Regan (Springer, 2021)

Timothy is Editor in Chief of print and digital magazines All About History and History of War. He has previously worked on sister magazine All About Space, as well as photography and creative brands including Digital Photographer and 3D Artist. He has also written for How It Works magazine, several history bookazines and has a degree in English Literature from Bath Spa University.

The history of computing is both evolution and revolution

Head, Department of Computing & Information Systems, The University of Melbourne

Disclosure statement

Justin Zobel does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

University of Melbourne provides funding as a founding partner of The Conversation AU.

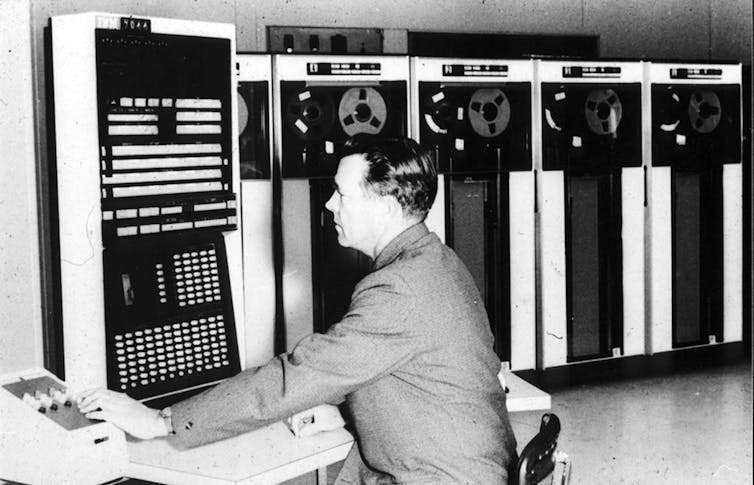

This month marks the 60th anniversary of the first computer in an Australian university. The University of Melbourne took possession of the machine from CSIRO and on June 14, 1956, the recommissioned CSIRAC was formally switched on. Six decades on, our series Computing turns 60 looks at how things have changed.

It is a truism that computing continues to change our world. It shapes how objects are designed, what information we receive, how and where we work, and who we meet and do business with. And computing changes our understanding of the world around us and the universe beyond.

For example, while computers were initially used in weather forecasting as no more than an efficient way to assemble observations and do calculations, today our understanding of weather is almost entirely mediated by computational models.

Another example is biology. Where once research was done entirely in the lab (or in the wild) and then captured in a model, it often now begins in a predictive model, which then determines what might be explored in the real world.

The transformation that is due to computation is often described as digital disruption. But an aspect of this transformation that can easily be overlooked is that computing has been disrupting itself.

Evolution and revolution

Each wave of new computational technology has tended to lead to new kinds of systems, new ways of creating tools, new forms of data, and so on, which have often overturned their predecessors. What has seemed to be evolution is, in some ways, a series of revolutions.

But the development of computing technologies is more than a chain of innovation – a process that’s been a hallmark of the physical technologies that shape our world.

For example, there is a chain of inspiration from waterwheel, to steam engine, to internal combustion engine. Underlying this is a process of enablement. The industry of steam engine construction yielded the skills, materials and tools used in construction of the first internal combustion engines.

In computing, something richer is happening where new technologies emerge, not only by replacing predecessors, but also by enveloping them. Computing is creating platforms on which it reinvents itself, reaching up to the next platform.

Getting connected

Arguably, the most dramatic of these innovations is the web. During the 1970s and 1980s, there were independent advances in the availability of cheap, fast computing, of affordable disk storage and of networking.

Compute and storage were taken up in personal computers, which at that stage were standalone, used almost entirely for gaming and word processing. At the same time, networking technologies became pervasive in university computer science departments, where they enabled, for the first time, the collaborative development of software.

This was the emergence of a culture of open-source development, in which widely spread communities not only used common operating systems, programming languages and tools, but collaboratively contributed to them.

As networks spread, tools developed in one place could be rapidly promoted, shared and deployed elsewhere. This dramatically changed the notion of software ownership, of how software was designed and created, and of who controlled the environments we use.

The networks themselves became more uniform and interlinked, creating the global internet, a digital traffic infrastructure. Increases in computing power meant there was spare capacity for providing services remotely.

The falling cost of disk meant that system administrators could set aside storage to host repositories that could be accessed globally. The internet was thus used not just for email and chat forums (known then as news groups) but, increasingly, as an exchange mechanism for data and code.

This was in strong contrast to the systems used in business at that time, which were customised, isolated, and rigid.

With hindsight, the confluence of networking, compute and storage at the start of the 1990s, coupled with the open-source culture of sharing, seems almost miraculous. An environment ready for something remarkable, but without even a hint of what that thing might be.

The ‘superhighway’

It was to enhance this environment that then US Vice President Al Gore proposed in 1992 the “information superhighway”, before any major commercial or social uses of the internet had appeared.

Meanwhile, in 1990, researchers at CERN, including Tim Berners-Lee, created a system for storing documents and publishing them to the internet, which they called the world wide web.

As knowledge of this system spread on the internet (transmitted by the new model of open-source software systems), people began using it via increasingly sophisticated browsers. They also began to write documents specifically for online publication – that is, web pages.

As web pages became interactive and resources moved online, the web became a platform that has transformed society. But it also transformed computing.

With the emergence of the web came the decline of the importance of the standalone computer, dependent on local storage.

We all connect

The value of these systems is due to another confluence: the arrival on the web of vast numbers of users. For example, without behaviours to learn from, search engines would not work well, so human actions have become part of the system.

There are (contentious) narratives of ever-improving technology, but also an entirely unarguable narrative of computing itself being transformed by becoming so deeply embedded in our daily lives.

This is, in many ways, the essence of big data. Computing is being fed by human data streams: traffic data, airline trips, banking transactions, social media and so on.

The challenges of the discipline have been dramatically changed by this data, and also by the fact that the products of the data (such as traffic control and targeted marketing) have immediate impacts on people.

Software that runs robustly on a single computer is very different from software with a high degree of rapid interaction with the human world. This difference gives rise to needs for new kinds of technologies and experts, in ways not even remotely anticipated by the researchers who created the technologies that led to this transformation.

Decisions that were once made by hand-coded algorithms are now made entirely by learning from data. Whole fields of study may become obsolete.

The discipline does indeed disrupt itself. And as the next wave of technology arrives (immersive environments? digital implants? aware homes?), it will happen again.


Justin Zobel examines how the computer has changed over 60 years to become what it is today.

In the early twenty-first century, the computer revolution is exemplified by a personal computer linked to the Internet and the World Wide Web. Modern computing, however, is the result of the convergence of three much older technologies—office machinery, mathematical instruments, and telecommunications—all of which were well established in ...

The history of computers goes back over 200 years. At first theorized by mathematicians and entrepreneurs, during the 19th century mechanical calculating machines were designed and built to solve...

The 1970s marked the beginning of the personal computer revolution. Companies like Apple and Microsoft introduced affordable and user-friendly computers, bringing computing power into homes and...

The first computer. By the second decade of the 19th century, a number of ideas necessary for the invention of the computer were in the air. First, the potential benefits to science and industry of being able to automate routine calculations were appreciated, as they had not been a century earlier.



The modern era of digital computers began in the late 1930s and early 1940s in the United States, Britain, and Germany. The first devices used switches operated by electromagnets (relays). Their programs were stored on punched paper tape or cards, and they had limited internal data storage.

Computation Becomes Electronic

World War II acted as midwife to the birth of the modern electronic computer. Unprecedented military demands for calculations—and hefty wartime budgets—spurred innovation. Early electronic computers were…

With the invention in 1945 of the stored-program computer, several months after the Second World War ended, and with the publicity surrounding the introduction in 1952 of the first commercial computer (the Universal Automatic Computer, or UNIVAC), the word computer became associated with a machine, rather than a human.