Optimally weighted Z-test is a powerful method for combining probabilities in meta-analysis

Dmitri V. Zaykin


Correspondence: Dmitri Zaykin, National Institute of Environmental Health Sciences, 111 T.W. Alexander Drive, South Bldg (101); Mail Drop: A3-03, RTP, NC 27709, [email protected] , phone: (919) 541-0096, fax: (919) 541-4311

Issue date 2011 Aug.

The inverse normal and Fisher’s methods are two common approaches for combining P-values. Whitlock demonstrated that a weighted version of the inverse normal method, or “weighted Z-test”, is superior to Fisher’s method for combining P-values for one-sided T-tests. The problem with Fisher’s method is that it does not take advantage of weighting and loses power to the weighted Z-test when studies are differently sized. This issue was recently revisited by Chen, who observed that Lancaster’s variation of Fisher’s method had higher power than the weighted Z-test. Nevertheless, the weighted Z-test has comparable power to Lancaster’s method when its weights are set to square roots of sample sizes. Power can be further improved when additional information is available. Although there is no single approach that is the best in every situation, the weighted Z-test enjoys certain properties that make it an appealing choice as a combination method for meta-analysis.

Keywords: combining P-values, meta-analysis

Introduction

Evolutionary biologists have long used meta-analytic approaches to combine information from multiple studies. When raw data cannot be pooled across studies, meta-analysis based on P-values presents a convenient approach that can be nearly as powerful as that based on combining data. Many popular P-value combination methods take the same general form, where the P-value for the i-th study, $p_i$, is transformed by some function $H$, possibly utilizing study-specific weights, $w_i$. Next, a sum is taken, and the combined P-value is computed using the distribution of the resulting statistic, $T = \sum_i w_i H(p_i)$. For example, Stouffer’s (also known as “inverse normal”) method (Stouffer et al., 1949) takes $H$ to be the inverse normal distribution function. Lipták’s method (Lipták, 1958) is Stouffer’s method with weights; this method is commonly referred to as the weighted Z-test. Fisher’s method (Fisher, 1932) sets $H(p_i) = -2\ln(p_i)$. The binomial test (Wilkinson, 1951) counts the number of P-values that are below a threshold $\alpha$, in which case $H$ is the indicator function, $H(p_i) = I(p_i \le \alpha)$. Truncated P-value methods (Zaykin et al., 2002) combine only those P-values that fall below a threshold $\alpha$ by setting $H(p_i) = I(p_i \le \alpha)\ln(p_i)$. A combined P-value can be used in support of a common hypothesis tested in all studies, and a series of non-significant results may collectively suggest significance.
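The general form $T = \sum_i w_i H(p_i)$ can be illustrated with a minimal Python sketch of three of the unweighted methods named above. The function names are hypothetical, only the standard library is used, and Fisher’s combined P-value relies on the closed-form survival function of a chi-square variable with an even number of degrees of freedom.

```python
import math
from statistics import NormalDist

_norm = NormalDist()  # standard normal distribution


def stouffer(pvals):
    """Inverse normal method: H(p) = Phi^{-1}(1 - p), unit weights."""
    z = sum(_norm.inv_cdf(1.0 - p) for p in pvals) / math.sqrt(len(pvals))
    return 1.0 - _norm.cdf(z)


def fisher(pvals):
    """Fisher's method: T = sum(-2 ln p), chi-square with 2k df under H0."""
    k = len(pvals)
    half_t = -sum(math.log(p) for p in pvals)  # T / 2
    # Survival function of chi-square with 2k df:
    # exp(-T/2) * sum_{j=0}^{k-1} (T/2)^j / j!
    term, total = 1.0, 1.0
    for j in range(1, k):
        term *= half_t / j
        total += term
    return math.exp(-half_t) * total


def binomial_test(pvals, alpha=0.05):
    """Binomial test: T counts P-values <= alpha, Binomial(k, alpha) under H0."""
    k = len(pvals)
    t = sum(p <= alpha for p in pvals)
    return sum(
        math.comb(k, j) * alpha**j * (1.0 - alpha) ** (k - j)
        for j in range(t, k + 1)
    )
```

All three functions expect P-values strictly between 0 and 1. With a single study, `stouffer` and `fisher` return the input P-value unchanged, which is a convenient sanity check.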

Carefully chosen weights can, in general, improve power of combination methods. A motivation for the weighting may follow from the fact that different studies might be differently powered, and that should be reflected by the weighting. Consider the combined P-value of the weighted Z-test:

$$p_Z = 1 - \Phi\left( \frac{\sum_{i=1}^{k} w_i Z_i}{\sqrt{\sum_{i=1}^{k} w_i^2}} \right) \qquad (1)$$

where $Z_i = \Phi^{-1}(1 - p_i)$; $p_i$ is a P-value for the i-th study of $k$ studies in total, $w_i$ are weights, and $\Phi$, $\Phi^{-1}$ denote the standard normal cumulative distribution function and its inverse. Lipták suggested that the weights in this method “should be chosen proportional to the ‘expected’ difference between the null hypothesis and the real situation and inversely proportional to the standard deviation of the statistic used in the i-th experiment” and further suggested that when nothing else is available but the sample sizes of the studies ($n_i$), then the square root of $n_i$ can be used as a weight (Lipták, 1958). Won et al. verified Lipták’s claim more formally by showing that his test has optimal power when weights are set to the expected difference (i.e. the effect size) over the known or the estimated standard error (Won et al., 2009). This method of weighting requires knowledge of anticipated effect sizes for all combined studies, which is rarely available. Weightings by the estimated standard error or by the square root of sample size are more feasible in practice.
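A minimal sketch of the weighted Z-test follows, assuming one-sided P-values strictly between 0 and 1 and positive weights. The function name `weighted_z`, the sample sizes, and the P-values are hypothetical and serve only as an illustration.

```python
import math
from statistics import NormalDist


def weighted_z(pvals, weights):
    """Combined one-sided P-value: 1 - Phi(sum(w*Z) / sqrt(sum(w^2)))."""
    norm = NormalDist()
    z = [norm.inv_cdf(1.0 - p) for p in pvals]  # Z_i = Phi^{-1}(1 - p_i)
    numerator = sum(w * zi for w, zi in zip(weights, z))
    denominator = math.sqrt(sum(w * w for w in weights))
    return 1.0 - norm.cdf(numerator / denominator)


# Hypothetical studies: sample sizes and one-sided P-values.
sample_sizes = [10, 20, 40, 80]
pvals = [0.40, 0.20, 0.08, 0.03]

# Square-root-of-sample-size weights, as suggested by Liptak
# when only the sample sizes are known.
p_sqrt_n = weighted_z(pvals, [math.sqrt(n) for n in sample_sizes])
print(p_sqrt_n)
```

Only the relative sizes of the weights matter: multiplying all weights by a positive constant leaves the combined P-value unchanged.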

When different samples are taken from similar populations, a model that assumes a common effect size and direction among samples is appropriate. The ideal approach in this case is to pool raw data from all samples and to conduct a single statistical test. Whitlock considered such a test with its P-value and evaluated how well a combined P-value approximates this “true” P-value (Whitlock, 2005). He evaluated Fisher’s method for combining P-values (Fisher, 1932) as well as the unweighted and weighted Z-tests, using one-sided P-values. Indeed, Whitlock found via simulation experiments that a weighted version of the combination Z-test outperformed both Fisher’s and Stouffer’s methods. Nevertheless, weighted versions of Fisher’s method exist, and it had remained unclear whether a weighted version of Fisher’s method may be as powerful as the weighted Z-test. This issue was recently taken on by Chen, who found that Lancaster’s generalization of Fisher’s test was more powerful than the weighted Z-test (Chen, 2011). In Chen’s application, P-values were transformed to chi-square variables by an inverse chi-square transformation with the degrees of freedom equal to the sample size of the study, i.e. Lancaster’s statistic is $T = \sum_i [\chi^2_{(n_i)}]^{-1}(1 - p_i)$ with the distribution $T \sim \chi^2_{(\sum n_i)}$.

Both Whitlock and Chen used non-optimal weights for the weighted Z-test, setting them to the sample sizes of the studies. Whitlock’s original conclusions are valid, but the weights need to be adjusted according to suggestions by Lipták and Won et al. In Whitlock’s setup, samples that corresponded to different studies were drawn from the same population. In this setup, the T-test based on pooled raw data can be viewed as an “ideal” test. In this case, optimal weights for the weighted Z method are given by the square roots of the sample sizes, $\sqrt{n_i}$. These weights are optimal in the sense that the combined P-value approximates the P-value of the test based on raw data. This can be seen from writing out a Z statistic based on pooled raw data in terms of statistics for the individual studies. The pooled data statistic is $Z_{total} = \sqrt{n_T}\,\bar{T}/\hat{S}_T$, where $\bar{T}$ is the sample average for the total sample of size $n_T$ and $\hat{S}_T$ is the sample standard deviation. Suppose that we split the sample into two parts of sizes $n_X$, $n_Y$ and calculate sample means ($\bar{X}$, $\bar{Y}$) and standard deviations ($\hat{S}_X$, $\hat{S}_Y$) separately for these two samples. We can write the pooled statistic in terms of the two means as

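$$Z_{total} = \frac{\sqrt{n_X}\,\dfrac{\sqrt{n_X}\,\bar{X}}{\hat{S}_T} + \sqrt{n_Y}\,\dfrac{\sqrt{n_Y}\,\bar{Y}}{\hat{S}_T}}{\sqrt{n_X + n_Y}}$$

while the weighted statistic that combines information from the two samples is

$$Z_w = \frac{w_X\,\dfrac{\sqrt{n_X}\,\bar{X}}{\hat{S}_X} + w_Y\,\dfrac{\sqrt{n_Y}\,\bar{Y}}{\hat{S}_Y}}{\sqrt{w_X^2 + w_Y^2}}$$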

The pieces $\sqrt{n_X}\,\bar{X}/\hat{S}_X$ and $\sqrt{n_Y}\,\bar{Y}/\hat{S}_Y$ can be recovered from P-values for the two samples by the inverse normal transformation. This statistic is the weighted Z-test for combining P-values. We can see that $Z_w$ approximates $Z_{total}$ when the weights $w_X$, $w_Y$ are set to $\sqrt{n_X}$, $\sqrt{n_Y}$, because $\hat{S}_X$, $\hat{S}_Y$ and $\hat{S}_T$ all estimate the same standard deviation. The same argument holds for more than two samples. Regarding Lancaster’s method, Chen noted cautiously that setting degrees of freedom to the sample size of the i-th study “may not be optimal”. It is, however, an optimal weighting for his simulation setup, where samples are obtained from the same population. This follows from the fact that the chi-square distribution for the i-th statistic approaches a normal distribution with the variance $2n_i$: when the degrees of freedom are set to $n_i$, the variance of the i-th statistic in Lancaster’s method is proportional to the variance of the corresponding term for the optimally weighted Z-test. Thus, the power advantage of Lancaster’s method over the weighted Z method observed by Chen was at least to some degree due to the usage of non-optimal weights for the Z method. As I will verify by simulation experiments, power of the optimally weighted Z method at conventional 1% and 5% levels is very similar to that of Lancaster’s method.
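The argument that square-root weights reproduce the pooled statistic can be checked numerically. The sketch below is an illustration under stated assumptions, not the simulation used in the paper: it draws two hypothetical samples (sizes 200 and 800, mean 0.1, unit variance, fixed seed) from the same normal population, computes $\sqrt{n}$ times the sample mean over the sample standard deviation for each sample and for the pooled data, and compares two weightings.

```python
import math
import random
from statistics import mean, stdev


def z_stat(sample):
    """Z statistic for a zero-mean null: sqrt(n) * mean / sd."""
    return math.sqrt(len(sample)) * mean(sample) / stdev(sample)


def z_weighted(z_values, weights):
    """Weighted combination of per-sample Z statistics."""
    numerator = sum(w * z for w, z in zip(weights, z_values))
    return numerator / math.sqrt(sum(w * w for w in weights))


rng = random.Random(1)  # fixed seed so the illustration is repeatable
x = [rng.gauss(0.1, 1.0) for _ in range(200)]
y = [rng.gauss(0.1, 1.0) for _ in range(800)]

z_total = z_stat(x + y)  # statistic on pooled raw data
z_parts = [z_stat(x), z_stat(y)]

# Weights set to square roots of sample sizes versus raw sample sizes.
z_sqrt_n = z_weighted(z_parts, [math.sqrt(len(x)), math.sqrt(len(y))])
z_n = z_weighted(z_parts, [len(x), len(y)])

print(z_total, z_sqrt_n, z_n)
```

The square-root-weighted statistic differs from the pooled statistic only through the differences among the three standard deviation estimates, so the two values are expected to be close for samples of this size.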

Chen chose Lancaster’s method in favor of an extension of Fisher’s test where weighted inverse chi-square-transformed P-values are added, for the reason that “the distribution of the sum of weighted $\chi^2$ is usually unknown”. Several algorithms for obtaining this distribution have been published, however, and are freely available. Duchesne and Lafaye De Micheaux recently described an R package that implements several approximations to that distribution as well as “exact” algorithms with guaranteed, user-controlled precision (Duchesne & Lafaye De Micheaux, 2010). The weighted Fisher’s test is a direct $\chi^2$-based analogue of the weighted Z-test. Therefore, I included this method in the comparisons. Specifically, the weighted Fisher’s test (the weighted $\chi^2$ test) is based on the distribution of the following statistic:

$$T = \sum_{i=1}^{k} w_i\,[\chi^2_{(2)}]^{-1}(1 - p_i) \qquad (2)$$

where $[\chi^2_{(2)}]^{-1}$ is the inverse cumulative chi-square distribution function with two degrees of freedom.
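For a small number of studies with distinct weights, the null distribution of the weighted Fisher statistic has a simple closed form, which the sketch below uses. This is a swapped-in technique for illustration, not Farebrother’s algorithm and not the CompQuadForm routine used in the paper. It relies on two facts: the two degree of freedom inverse chi-square transform of $1 - p_i$ equals $-2\ln(p_i)$, and $w_i\chi^2_{(2)}$ is an exponential variable with mean $2w_i$, so the sum follows a hypoexponential distribution. The function name is hypothetical, and the formula is numerically unstable when weights are nearly equal.

```python
import math


def weighted_fisher(pvals, weights):
    """Combined P-value for T = sum(w_i * (-2 ln p_i)), distinct weights only.

    Uses the hypoexponential survival function:
    P(T > t) = sum_i [prod_{j != i} w_i / (w_i - w_j)] * exp(-t / (2 w_i)).
    """
    if len(set(weights)) != len(weights):
        raise ValueError("this closed form requires distinct weights")
    t = sum(-2.0 * w * math.log(p) for p, w in zip(pvals, weights))
    survival = 0.0
    for i, wi in enumerate(weights):
        coefficient = 1.0
        for j, wj in enumerate(weights):
            if j != i:
                coefficient *= wi / (wi - wj)
        survival += coefficient * math.exp(-t / (2.0 * wi))
    return survival
```

For equal or nearly equal weights, or for many studies, a general-purpose routine for the distribution of a positive linear combination of chi-squares is the safer choice.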

For simulation experiments I followed the setup of Chen and Whitlock. I assumed a T-test of the null hypothesis $H_0: \mu \le 0$ against the one-sided alternative $\mu > 0$, and values of $\mu$ from 0 to 0.1 with an increment of 0.01. For eight studies with sample sizes $n_i$ of 10, 20, 40, 80, 160, 320, 640, and 1280, random samples were obtained assuming a normal distribution with the mean $\mu$ and the variance of one. As in Chen, power values were computed for two significance levels, $\alpha = 0.01$ and $\alpha = 0.05$. Weightings by $\sqrt{n_i}$, by the inverse of the estimated standard error ($1/\widehat{SE}_i$), and by the standardized effect size ($\mu/\widehat{SE}_i$) were considered. The number of simulations was 30,000. Tukey’s plots (Tukey, 1977) showing correspondence of combined and “true” P-values (i.e. obtained from a statistic on pooled data) were obtained for $\mu = 0$ and $\mu = 0.05$. In Tukey’s plots, $(X + Y)/2$ is plotted against $Y - X$. Large spread on the plot indicates discrepancy between the $X$ and $Y$ values. The combined P-value for the weighted Fisher’s method (Equation 2) was obtained with a function from the R package CompQuadForm (Duchesne & Lafaye De Micheaux, 2010) that implements Farebrother’s “exact” method (Farebrother, 1984). In addition, I considered three scenarios with study heterogeneity. In the first scenario, the $\mu$ value for the i-th study was randomly drawn without replacement from the vector of values (0.01, 0.02, …, 0.1) for each simulation run. In the second scenario, $\mu$ was assumed fixed (0.07), and the standard deviation value for the i-th study ($\sigma_i$) at every simulation step was drawn without replacement from eight values that were equally spaced from 3/4 to 2 3/4 (i.e. 0.75 to 2.75). In the third scenario, both $\mu_i$ and $\sigma_i$ were randomly drawn for each simulation run.
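The effect of the weighting choice on power in this design can be sketched analytically under a simplifying assumption that differs from the simulations described above: the variance is treated as known and equal to one, so each study’s statistic is exactly normal with mean $\sqrt{n_i}\,\mu$ and unit variance. The weighted combination is then normal with mean $\mu\sum_i w_i\sqrt{n_i}/\sqrt{\sum_i w_i^2}$. The function name `z_power` is hypothetical.

```python
import math
from statistics import NormalDist


def z_power(mu, sample_sizes, weights, alpha=0.05):
    """Power of the one-sided weighted Z-test, assuming known unit variance."""
    norm = NormalDist()
    # Mean of the combined statistic when each Z_i ~ N(sqrt(n_i) * mu, 1).
    shift = mu * sum(w * math.sqrt(n) for w, n in zip(weights, sample_sizes))
    shift /= math.sqrt(sum(w * w for w in weights))
    return 1.0 - norm.cdf(norm.inv_cdf(1.0 - alpha) - shift)


n = [10, 20, 40, 80, 160, 320, 640, 1280]  # sample sizes from the text
mu = 0.05

power_sqrt_n = z_power(mu, n, [math.sqrt(v) for v in n])  # sqrt(n_i) weights
power_n = z_power(mu, n, n)                               # n_i weights
power_equal = z_power(mu, n, [1.0] * len(n))              # unweighted

print(power_sqrt_n, power_n, power_equal)
```

By the Cauchy-Schwarz inequality the shift is largest when the weights are proportional to $\sqrt{n_i}$, in which case it equals $\mu\sqrt{\sum_i n_i}$, the shift of the pooled-data statistic under the same assumption.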

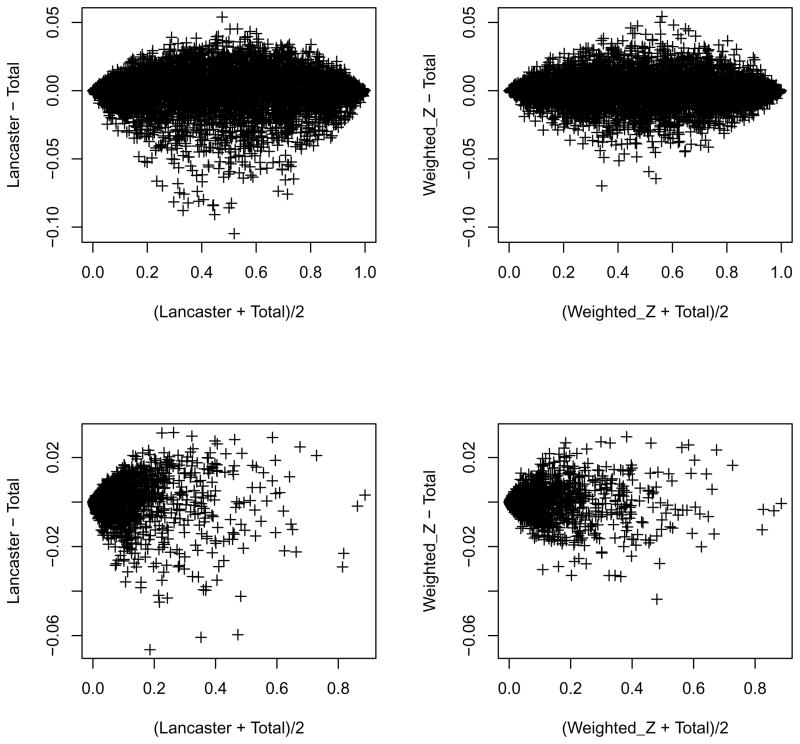

Tables 1 and 2 present power values for the studied tests. Table 1, which follows the setup of Whitlock and Chen, shows that the weighted Z-test with weights $\sqrt{n_i}$ or $1/\widehat{SE}_i$, Lancaster’s method, and the test based on pooled data all have nearly identical power. The weighted Fisher’s test has a slightly lower power. Table 2 shows power values for heterogeneity scenarios as well as type-I error rates for the case $\mu = 0$ but with a random, study-specific variance. The total T-test is no longer most powerful in this case, due to heterogeneity of effects. Weighting by either $\sqrt{n_i}$ or by $1/\widehat{SE}_i$ delivers the same improvement in power when only the means are heterogeneous between studies. When there is heterogeneity of the variances, weighting by $1/\widehat{SE}_i$ yields a power advantage over weighting by $\sqrt{n_i}$. Power is the highest when standardized effects ($\mu/\widehat{SE}_i$) are used as weights. Correlations between the true and the combined P-values were found to be at least 99% for all values of $\mu$ for Lancaster’s and the weighted Z methods. The corresponding correlation for the weighted Fisher’s method was lower, ranging from about 91% to 94% depending on the value of $\mu$. Tukey’s plots in Figure 1 show a good correspondence of P-values for the pooled data test with P-values for Lancaster’s and the $\sqrt{n_i}$-weighted Z methods. Lancaster’s method forms a more “snowy” cloud and the weighted Z method P-values are somewhat closer to the true values.

Table 1. Power assuming a common μ value for all samples

Table 2. Type-I error and power assuming heterogeneous μ and σ² values

Figure 1. Tukey’s plots of P-values for Lancaster’s and the $\sqrt{n_i}$-weighted Z-test vs. the total T-test. Top row: μ = 0. Bottom row: μ = 0.05.

Meta-analysis of P-values generally benefits from weighting. When samples are obtained from the same or similar populations, as in the model studied by Whitlock and Chen, the optimal weights for the Z-test are given by $\sqrt{n_i}$. In this case, the weighted Z-test, Lancaster’s test and the test based on pooled data provide very similar power. This is expected, because Lancaster’s method approaches the weighted Z method asymptotically, as $\min(n_i)$ increases. When there is heterogeneity of variances, but the true mean is the same across studies, weighting by $1/\widehat{SE}_i$ is optimal, but the gain in power is not great compared to weighting by $\sqrt{n_i}$ (0.784 vs. 0.743 at α = 5%). To an extent, the power increase is small because of the large range of sample sizes. A constant sample size of n = 289 would have given the powers of 0.801 vs. 0.743 respectively, for the same assumed heterogeneity of variances, $\max(\sigma^2)/\min(\sigma^2) = 13.4$. When there is heterogeneity of means, the Z-test that uses standardized effect sizes as weights has the largest power (Lipták, 1958; Won et al., 2009); however, an application of this test requires the knowledge of $\mu$. Note that this value needs to be pre-specified: plugging in an estimate $\hat{\mu}$ obtained from the same data that was used to compute P-values would invalidate the combination test.

In this study, one-sided P-values were assumed. Such P-values are appropriate for meta-analytic combination of P-values from several studies. Two-sided P-values are generally inappropriate, because they are oblivious to the effect direction. Two-sided P-values from two studies in which the effect direction is flipped can nevertheless both be small, resulting in an inappropriately small combined P-value. On the other hand, the combined result of the corresponding one-sided P-values will properly reflect cancellation of the pooled effect that would have been observed if raw data from the two studies were combined.

Despite the fact that the mechanics of the meta-analytic process involves manipulation of one-sided P-values, it is often the case that the final result needs to be a two-sided P-value. For example, when allele frequencies are compared between two groups of individuals classified based on the presence or absence of a trait, the null hypothesis is usually that the frequency is the same, and the alternative hypothesis does not specify a particular effect direction. The weighted Z-test provides an important advantage in dealing with this situation, due to symmetry of the normal transformation. There are two possible one-sided combined P-values, one for each assumed effect direction, but with the weighted Z method, the combined P-value for the first assumed direction is the same distance from 1/2 as the combined P-value for the second assumed direction. Therefore, one can arbitrarily assume either one of the two directions when computing one-sided P-values, and obtain a combined one-sided P-value, $p_{\text{one-sided}}$. The two-sided combined P-value is the same regardless of the assumed direction:

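$$p_{\text{two-sided}} = 2\,\min\left(p_{\text{one-sided}},\; 1 - p_{\text{one-sided}}\right) \qquad (3)$$

What if available individual P-values are all two-sided? Often, studies report P-values that correspond to statistics such as |T| and |Z|, or the squared value, i.e. the one degree of freedom chi-square. These individual P-values can be converted to one-sided before combining as follows:

$$p_{\text{one-sided}} = \begin{cases} p_{\text{two-sided}}/2 & \text{if the effect is in the assumed direction} \\ 1 - p_{\text{two-sided}}/2 & \text{otherwise} \end{cases} \qquad (4)$$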

Once again, the assumed effect direction can be chosen arbitrarily. For example, in testing for association of an allele with a trait at a biallelic locus A/a, we can arbitrarily choose one of the alleles, e.g. allele A. Then the “effect direction” for the i-th study is positive if there is positive correlation of that allele with the presence of the trait in that study. Once these one-sided P-values are combined, the result can be converted back to two-sided by Equation (3).
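The two conversions, from individual two-sided P-values to one-sided ones and from the combined one-sided P-value back to a two-sided one, can be sketched as follows. The helper names, the three hypothetical studies, and their effect signs are assumptions made for illustration. The sketch also checks that the final two-sided result does not depend on which direction is assumed.

```python
import math
from statistics import NormalDist


def to_one_sided(p_two_sided, same_direction):
    """p/2 if the effect is in the assumed direction, 1 - p/2 otherwise."""
    half = p_two_sided / 2.0
    return half if same_direction else 1.0 - half


def to_two_sided(p_one_sided):
    """Two-sided P-value: 2 * min(p, 1 - p)."""
    return 2.0 * min(p_one_sided, 1.0 - p_one_sided)


def weighted_z(pvals, weights):
    """Weighted Z-test for combining one-sided P-values."""
    norm = NormalDist()
    numerator = sum(w * norm.inv_cdf(1.0 - p) for p, w in zip(pvals, weights))
    return 1.0 - norm.cdf(numerator / math.sqrt(sum(w * w for w in weights)))


# Hypothetical studies: two-sided P-values, sign of the effect of allele A,
# and square-root-of-sample-size weights.
two_sided = [0.04, 0.10, 0.30]
signs = [+1, +1, -1]
weights = [math.sqrt(n) for n in (50, 100, 200)]


def combined_two_sided(assumed_sign):
    one_sided = [
        to_one_sided(p, s == assumed_sign) for p, s in zip(two_sided, signs)
    ]
    return to_two_sided(weighted_z(one_sided, weights))


p_assume_positive = combined_two_sided(+1)
p_assume_negative = combined_two_sided(-1)
print(p_assume_positive, p_assume_negative)
```

Flipping the assumed direction replaces every one-sided P-value $p$ by $1 - p$, which flips the sign of every $Z_i$ and therefore maps the combined one-sided P-value to its complement.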

Another advantage of the weighted Z-test is that it can be easily extended to account for the case of correlated statistics between studies. For the test to remain valid under such correlation, we need an assumption that the set of $\{Z_i\}$ jointly follows a multivariate normal distribution under the null hypothesis. If $\mathrm{cor}(Z_i, Z_j) = r_{ij}$, the modification amounts to replacing the denominator in Equation (1) with $\sqrt{\sum_i w_i^2 + 2\sum_{i<j} w_i w_j r_{ij}}$. The multivariate normal assumption is often justified asymptotically, and in certain situations the correlations $\{r_{ij}\}$ are known. For example, when each $Z_i$ is a result of comparing group $i$ of sample size $n_i$ to a common “control” group of sample size $n_0$ by the two-sample T-test, then $r_{ij} = \sqrt{[1/(1 + n_0/n_i)]\,[1/(1 + n_0/n_j)]}$ (Dunnett, 1955). In principle, a variation of the weighted Fisher’s method can be extended to this situation, if we can assume that chi-square statistics formed from individual P-values can be represented by squares of underlying multivariate normal variables with correlations $r_{ij}$. However, the required computations are more involved. First, the two degree of freedom chi-square transformation in Equation (2) would have to be replaced with the one degree of freedom transformation. Then one would need to compute eigenvalues of $\mathrm{diag}(\mathbf{w})\,(R \circ R)\,\mathrm{diag}(\mathbf{w})^T$, where $\mathbf{w}$ is the vector of weights and $R \circ R$ is the matrix of squared correlations. Finally, to compute the combined P-value, one can use the fact that the weighted sum of these correlated chi-squares can be represented by the sum of independent weighted chi-squares with weights given by the above eigenvalues (Box, 1954). Thus, one can use the observed weighted sum of correlated chi-squares with weights substituted by the eigenvalues as an input to a routine for computing the cumulative distribution of the sum of independent weighted chi-squares.
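The correlated version of the weighted Z-test can be sketched as below, assuming joint normality of the $Z_i$ and a known correlation matrix. The variance of $\sum_i w_i Z_i$ is $\sum_i\sum_j w_i w_j r_{ij}$, which equals $\sum_i w_i^2 + 2\sum_{i<j} w_i w_j r_{ij}$. The function names are hypothetical, and `shared_control_corr` encodes the shared-control correlation in the square-root form $1/\sqrt{(1 + n_0/n_i)(1 + n_0/n_j)}$.

```python
import math
from statistics import NormalDist


def weighted_z_correlated(pvals, weights, corr):
    """Weighted Z-test with known correlations corr[i][j] between Z_i, Z_j."""
    norm = NormalDist()
    z = [norm.inv_cdf(1.0 - p) for p in pvals]
    numerator = sum(w * zi for w, zi in zip(weights, z))
    k = len(weights)
    # Var(sum w_i Z_i) = sum_i sum_j w_i w_j r_ij, with r_ii = 1.
    variance = sum(
        weights[i] * weights[j] * corr[i][j] for i in range(k) for j in range(k)
    )
    return 1.0 - norm.cdf(numerator / math.sqrt(variance))


def shared_control_corr(n0, group_sizes):
    """Correlation matrix when each group is compared to one control group."""
    k = len(group_sizes)
    corr = [[1.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            if i != j:
                ni, nj = group_sizes[i], group_sizes[j]
                corr[i][j] = 1.0 / math.sqrt((1 + n0 / ni) * (1 + n0 / nj))
    return corr


# Hypothetical example: two treatment groups sharing one control group.
corr = shared_control_corr(100, [100, 100])
p_independent = weighted_z_correlated([0.01, 0.02], [1.0, 1.0],
                                      [[1.0, 0.0], [0.0, 1.0]])
p_correlated = weighted_z_correlated([0.01, 0.02], [1.0, 1.0], corr)
print(p_independent, p_correlated)
```

With positively correlated statistics and individually small P-values, ignoring the correlation understates the variance of the weighted sum and so gives a combined P-value that is too small.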

Although there is no single method for combining P-values that is most powerful in all situations, the meta-analytic setup considered by Whitlock and extended here to include study heterogeneity is quite general, because many forms of one-sided statistics approach a normal distribution asymptotically. Therefore, the $\sqrt{n_i}$- or $1/\widehat{SE}_i$-weighted Z-test for combining one-sided P-values can be recommended in most situations.

In this study, the weighted Fisher’s method showed slightly smaller power values compared to the other methods. If absolute values or squares of T-statistics for each study were assumed instead, as in calculation of two-sided tests, the weighted Fisher’s method would have yielded higher power values than either Lancaster’s or the weighted Z methods. As already noted, combining individual two-sided P-values is generally not appropriate in meta-analysis, where the same hypothesis is tested in all studies. Combination of two-sided P-values is more appropriate when individual tests are concerned with separate hypotheses. A small combined P-value in that case can be interpreted as evidence that one or more individual null hypotheses are false. Owing to the virtue of being sensitive to small P-values, the weighted Fisher’s method would provide good power, especially in those situations where there is pronounced heterogeneity of effect sizes between studies.

Acknowledgments

This research was supported by the Intramural Research Program of the NIH, National Institute of Environmental Health Sciences. I wish to express my appreciation for comments and suggestions by Professors Michael Whitlock and Allen Moore, and by an anonymous reviewer.

References

- Box GEP. Some theorems on quadratic forms applied in the study of analysis of variance problems, I. Effect of inequality of variance in the one-way classification. The Annals of Mathematical Statistics. 1954;25:290–302.

- Chen Z. Is the weighted z-test the best method for combining probabilities from independent tests? Journal of Evolutionary Biology. 2011;24:926–930. doi: 10.1111/j.1420-9101.2010.02226.x.

- Duchesne P, Lafaye De Micheaux P. Computing the distribution of quadratic forms: Further comparisons between the Liu-Tang-Zhang approximation and exact methods. Computational Statistics & Data Analysis. 2010;54:858–862.

- Dunnett CW. A multiple comparison procedure for comparing several treatments with a control. J Am Stat Assoc. 1955;50:1096–1121.

- Farebrother RW. Algorithm AS 204: The distribution of a positive linear combination of χ2 random variables. Applied Statistics. 1984;33:332–339.

- Fisher R. Statistical methods for research workers. Oliver and Boyd; Edinburgh: 1932.

- Lipták T. On the combination of independent tests. Magyar Tud Akad Mat Kutato Int Közl. 1958;3:171–196.

- Stouffer S, DeVinney L, Suchman E. The American soldier: Adjustment during army life. Vol. 1. Princeton University Press; Princeton, US: 1949.

- Tukey J. Exploratory data analysis. Addison-Wesley; Boston, Massachusetts, US: 1977.

- Whitlock MC. Combining probability from independent tests: the weighted Z-method is superior to Fisher’s approach. Journal of Evolutionary Biology. 2005;18:1368–1373. doi: 10.1111/j.1420-9101.2005.00917.x.

- Wilkinson B. A statistical consideration in psychological research. Psychological Bulletin. 1951;48:156–158. doi: 10.1037/h0059111.

- Won S, Morris N, Lu Q, Elston R. Choosing an optimal method to combine P-values. Statistics in Medicine. 2009;28:1537–1553. doi: 10.1002/sim.3569.

- Zaykin D, Zhivotovsky L, Westfall P, Weir B. Truncated product method for combining P-values. Genetic Epidemiology. 2002;22:170–185. doi: 10.1002/gepi.0042.

